Kubernetes is an open-source platform designed to automate deploying, scaling, and operating application containers. Imagine you have a fleet of ships (containers) that need to be managed, monitored, and directed efficiently. Kubernetes acts as the ship captain, ensuring everything runs smoothly.

Key Components of Kubernetes

Understanding the core components of Kubernetes is crucial for grasping how it functions:

- Nodes: These are the worker machines, either physical or virtual, where containers run.

- Pods: The smallest deployable unit in Kubernetes. A pod wraps one or more containers that share networking and storage, and represents a single instance of a running process in the cluster.

- Clusters: A set of nodes together with the control plane that manages them. The cluster is the entire Kubernetes system.

How Kubernetes Manages Containers

Kubernetes uses a system of nodes, pods, and clusters to manage containers efficiently. Here’s how:

- Scheduling: Kubernetes schedules pods onto nodes based on the resources the pods request and the capacity each node has available.

- Scaling: It can automatically scale up or down the number of container instances based on demand.

- Self-healing: Kubernetes automatically restarts failed containers, replaces containers, and kills containers that don’t respond to health checks.

Consider a food delivery app. Each service—user authentication, order management, delivery tracking—runs in its own container. Kubernetes ensures these containers run efficiently across different nodes, scales them during peak hours, and quickly recovers from any failures to ensure a seamless user experience.
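To make this concrete, here is a minimal sketch of how the order-management service might be declared as a Deployment. The name `order-management`, the image `example.com/order-management:1.0`, and the `/healthz` endpoint on port 8080 are hypothetical. `replicas` tells Kubernetes how many pods to keep running. The `resources.requests` give the scheduler what it needs to pick a node, and the `livenessProbe` is the health check whose failure triggers a container restart.

```yaml
# Hypothetical Deployment for the order-management service.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: order-management
spec:
  replicas: 3                    # Kubernetes keeps three pods running and replaces any that disappear
  selector:
    matchLabels:
      app: order-management
  template:
    metadata:
      labels:
        app: order-management
    spec:
      containers:
        - name: order-management
          image: example.com/order-management:1.0   # placeholder image
          ports:
            - containerPort: 8080
          resources:
            requests:            # used by the scheduler to choose a node with enough free capacity
              cpu: "250m"
              memory: "128Mi"
          livenessProbe:         # repeated failures cause the kubelet to restart the container
            httpGet:
              path: /healthz     # assumed health endpoint
              port: 8080
            initialDelaySeconds: 10
            periodSeconds: 10
```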

Horizontal Pod Autoscaling (HPA)

Horizontal Pod Autoscaling (HPA) is a powerful feature in Kubernetes that helps manage the number of pods in a deployment based on real-time demand. This ensures that applications can handle varying loads efficiently. Think of it as a smart manager who adds more employees during peak hours and reduces them when it’s quiet. 📈📉

Setting Up HPA

Setting up HPA involves a few steps. Here’s a simplified guide to get you started:

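- Ensure your Kubernetes cluster is up and running.

- Install the `metrics-server` to collect resource metrics.

- Make sure the containers in the target Deployment declare CPU requests, because HPA measures CPU utilization as a percentage of the requested CPU.

- Define a HorizontalPodAutoscaler resource that points at your Deployment.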

Here’s a basic example of an HPA configuration:

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: example-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: example-deployment
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 50
```

Metrics and Thresholds for Autoscaling

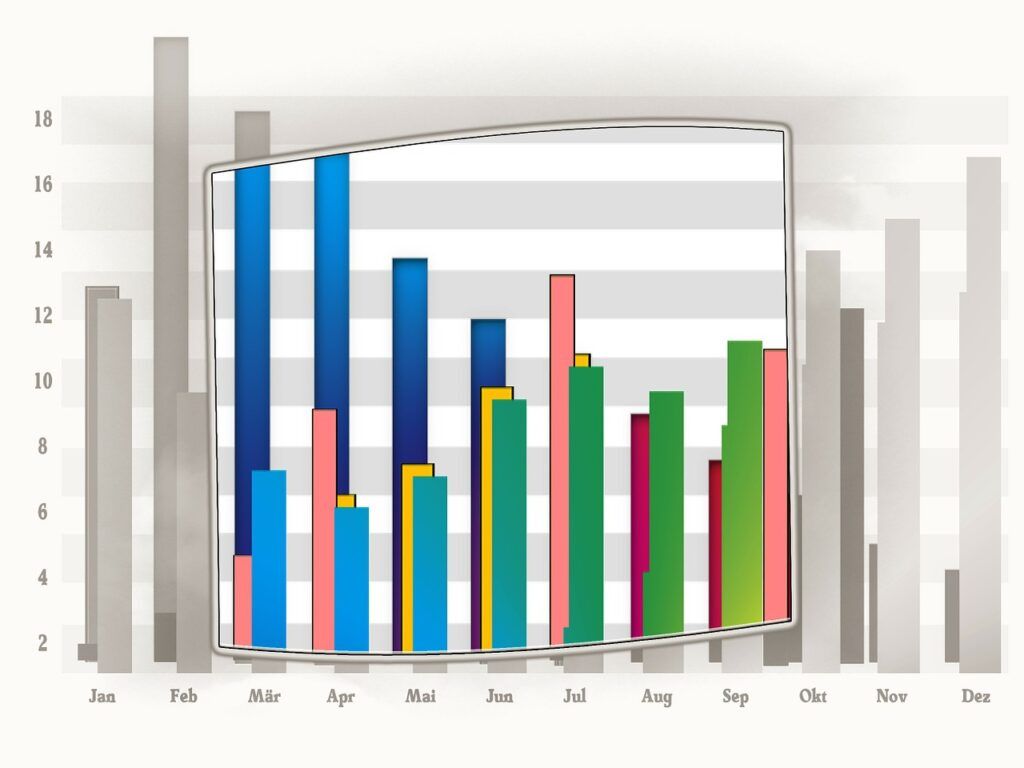

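HPA relies on metrics to make scaling decisions. The most common metric is CPU utilization, but HPA can also use memory usage or custom metrics. Rather than fixed thresholds, you set a target value for each metric, and HPA adjusts the replica count to keep the observed average close to that target. For example, you might set a target of 50% CPU utilization, measured against the pods' CPU requests. If average usage rises above the target, HPA adds pods. If it falls well below, HPA removes them.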
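Behind these decisions, the HPA controller computes a desired replica count from the ratio of the observed metric to its target, using the formula given in the Kubernetes HPA documentation. As a worked example with illustrative numbers, suppose 3 pods average 90% CPU utilization against a 50% target:

$$
\text{desiredReplicas} = \left\lceil \text{currentReplicas} \times \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil = \left\lceil 3 \times \frac{90}{50} \right\rceil = \lceil 5.4 \rceil = 6
$$

The result is then clamped to the minReplicas and maxReplicas bounds. By default, the controller does not scale at all when the ratio is within 10% of 1.0, which avoids constant small adjustments.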

Consider an online store during Black Friday:

- Normal days: 3 pods running

- Black Friday: Traffic spikes, HPA scales up to 10 pods

- After sale: Traffic drops, HPA scales back to 3 pods
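The Black Friday scenario could be expressed with the newer `autoscaling/v2` API, which supports several metrics and tunable scaling behavior. This is a sketch. The Deployment name `online-store` and the 70% memory target are illustrative assumptions.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: online-store-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: online-store            # hypothetical Deployment name
  minReplicas: 3                  # normal days
  maxReplicas: 10                 # upper bound during traffic spikes
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50  # percent of the pods' CPU requests
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: 70  # illustrative value
  behavior:
    scaleDown:
      stabilizationWindowSeconds: 300  # the default, shown explicitly: wait 5 minutes before shrinking
```

When several metrics are listed, HPA computes a replica count for each one and uses the highest. The scale-down stabilization window keeps the store from dropping back to 3 pods during a brief lull.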

Horizontal Pod Autoscaling is essential for maintaining application performance and resource efficiency. By automatically adjusting the number of pods based on metrics, HPA ensures that applications remain responsive under varying loads. It’s a smart solution for managing resources in dynamic environments. 🚀

Vertical Pod Autoscaling (VPA)

Vertical Pod Autoscaling (VPA) is a Kubernetes feature that automatically adjusts the resource limits and requests for containers in pods based on their current needs. This ensures optimal resource utilization and performance. 🌐

How VPA Works

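VPA monitors the resource usage of your applications and adjusts the CPU and memory requests accordingly. Its behavior is controlled by the `updateMode` setting:

- Off: Only recommendations are provided without any action.

- Initial: Applies recommendations only when new pods are created. Running pods are left alone.

- Recreate: Evicts running pods whose requests differ significantly from the recommendation, so they restart with updated values.

- Auto: Applies recommendations automatically. Currently this behaves like Recreate.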

Configuring VPA

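VPA is not part of core Kubernetes. It is an add-on from the Kubernetes autoscaler project that you install separately. Once its components are running, you configure it by creating a VerticalPodAutoscaler resource. Here is an example YAML configuration: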

```yaml
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: my-app-vpa
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  updatePolicy:
    updateMode: Auto
```

Use Cases and Limitations

VPA is particularly useful in the following scenarios:

- Applications with variable resource requirements 🖥️

- Ensuring optimal resource utilization and cost efficiency 💰

- Reducing manual intervention for resource adjustments

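However, there are some limitations:

- It offers little benefit for applications whose resource requirements are static.

- In Recreate or Auto mode, applying new requests evicts and recreates pods, which can cause temporary disruptions.

- It should not be combined with an HPA that scales on the same CPU or memory metrics, because the two autoscalers would react to the same signal and work against each other.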
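One way to reduce pod disruptions is to run VPA in a less intrusive mode and bound its recommendations. Below is a minimal sketch that reuses the `my-app` Deployment from the example above. The minimum and maximum values are illustrative assumptions.

```yaml
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: my-app-vpa
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  updatePolicy:
    updateMode: "Initial"         # only new pods get updated requests; running pods are not evicted
  resourcePolicy:
    containerPolicies:
      - containerName: "*"        # applies to every container in the pod
        controlledResources: ["cpu", "memory"]
        minAllowed:               # never recommend less than this
          cpu: "100m"
          memory: "128Mi"
        maxAllowed:               # never recommend more than this
          cpu: "2"
          memory: "2Gi"
```

Running `kubectl describe vpa my-app-vpa` shows the current recommendations, which is a low-risk way to evaluate VPA before allowing it to evict pods.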

Consider an e-commerce website experiencing fluctuating traffic. During peak hours, VPA can increase the resource allocation to handle more requests, while during off-peak hours, it reduces the resources to save costs. 🛒

What is Cluster Autoscaling?

Cluster autoscaling is a technique used in cloud computing to automatically adjust the size of a cluster based on the current demand. This ensures that you have enough resources to handle your workloads while optimizing cost and performance. Imagine it as a smart thermostat for your cloud resources, adjusting the capacity as needed.

How Cluster Autoscaler Works

The Cluster Autoscaler watches for pods that cannot be scheduled because no node has enough unreserved CPU or memory to satisfy their resource requests. When it finds such pending pods, it scales up by adding nodes to a node group. It also scales down by removing nodes that stay underutilized for a while and whose pods can run elsewhere. By default, "underutilized" means less than 50% of the node's capacity has been requested for 10 minutes. This dynamic adjustment helps maintain performance while controlling cost.

Configuring Cluster Autoscaler

Configuring the cluster autoscaler involves setting specific parameters and policies. Here are some key steps:

- Define the minimum and maximum number of nodes for each node group.

- Tune scale-down behavior, such as the utilization threshold and how long a node must be unneeded before it is removed.

- Choose an expander strategy, which decides which node group to grow when several could fit the pending pods.

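In practice, the Cluster Autoscaler runs as a Deployment inside the cluster and is configured mainly through command-line flags rather than a dedicated resource. Below is a sketch of the relevant container section. It assumes AWS as the cloud provider and a node group named my-node-group (a placeholder). Check your provider's documentation for the exact setup.

```yaml
# Excerpt: the pod spec inside a cluster-autoscaler Deployment (kube-system namespace)
spec:
  containers:
    - name: cluster-autoscaler
      image: registry.k8s.io/autoscaling/cluster-autoscaler:v1.30.0  # use the release matching your cluster version
      command:
        - ./cluster-autoscaler
        - --cloud-provider=aws
        - --nodes=1:10:my-node-group              # min:max:node-group-name
        - --scale-down-utilization-threshold=0.5  # nodes below 50% requested become removal candidates
        - --scale-down-unneeded-time=10m          # how long a node must stay unneeded before removal
        - --expander=least-waste                  # grow the group that leaves the least idle capacity
```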

Best Practices for Cluster Autoscaling

To ensure efficient and reliable autoscaling, consider these best practices:

- Monitor and Log: Continuously monitor resource utilization and log autoscaling events to understand patterns and make informed decisions.

- Test Scenarios: Simulate different load scenarios to verify that your autoscaler responds correctly.

- Set Realistic Limits: Define reasonable minimum and maximum node limits to prevent over-provisioning or under-provisioning.

- Optimize Node Size: Choose appropriate node sizes that fit your workload requirements.

Consider an e-commerce website that experiences fluctuating traffic. During peak shopping seasons, the traffic spikes, requiring more resources to handle the increased demand. With cluster autoscaling, the cluster automatically scales up to accommodate the surge, ensuring a smooth shopping experience for users. Once the traffic returns to normal levels, the cluster scales down, saving costs on unused resources.

Resource Management in Kubernetes

Kubernetes, often referred to as K8s, is a powerful system for managing containerized applications. Effective resource management in Kubernetes ensures that applications have the resources they need while optimizing overall usage.

Setting Resource Requests and Limits

In Kubernetes, resource requests and limits are essential for ensuring that applications run smoothly without overconsuming resources. Here’s a simple YAML example:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  containers:
    - name: example-container
      image: nginx
      resources:
        requests:
          memory: "64Mi"
          cpu: "250m"
        limits:
          memory: "128Mi"
          cpu: "500m"
```

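📝 Resource Requests: The amount of CPU and memory reserved for a container. The scheduler only places a pod on a node with enough unreserved capacity to satisfy its requests.

📝 Resource Limits: The maximum amount of resources a container can consume. A container that exceeds its CPU limit is throttled, and one that exceeds its memory limit is terminated (OOM-killed).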

Managing Resource Quotas

Resource quotas help ensure that a single namespace does not consume more than its fair share of resources. This is critical in multi-tenant environments. Below is an example of a resource quota:

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: example-quota
  namespace: example-namespace
spec:
  hard:
    pods: "10"
    requests.cpu: "4"
    requests.memory: "16Gi"
    limits.cpu: "8"
    limits.memory: "32Gi"
```

📊 Resource Quota: A set limit on the resources that can be consumed by a namespace.
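A quota can constrain compute resources such as `requests.cpu` or `limits.memory`. When it does, every new pod in that namespace must specify those values, or the API server rejects it. A LimitRange is commonly paired with the quota to supply defaults. The following is a minimal sketch with illustrative values that mirror the Pod example above.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: example-defaults
  namespace: example-namespace
spec:
  limits:
    - type: Container
      defaultRequest:     # applied to containers that declare no requests
        cpu: "250m"
        memory: "64Mi"
      default:            # applied to containers that declare no limits
        cpu: "500m"
        memory: "128Mi"
```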

Strategies for Optimizing Resource Utilization

To optimize resource utilization, consider the following strategies:

- Right-sizing: Regularly monitor and adjust resource requests and limits based on application performance metrics.

- Horizontal Pod Autoscaling: Automatically scale your pods based on CPU or memory usage.

- Vertical Pod Autoscaling: Adjust the resource requests and limits of running pods to better match their actual usage.

💡 In a production environment, a company might use Horizontal Pod Autoscaling to handle increased traffic during peak hours, ensuring smooth performance without manual intervention.

Load Balancing in Kubernetes

Load balancing is a critical component in Kubernetes that ensures your application can handle traffic effectively. 🏗️ In simple terms, load balancing distributes incoming network traffic across multiple servers, ensuring no single server becomes a bottleneck. This is crucial for maintaining the availability and performance of your applications.

Types of Load Balancers in Kubernetes

There are several types of load balancers you can use in Kubernetes:

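- Internal Load Balancers: A Service of type ClusterIP distributes traffic among pods within the cluster.

- External Load Balancers: A Service of type LoadBalancer provisions a cloud provider's load balancer that distributes external traffic to the services in your cluster.

- Ingress Controllers: Manage external access to services, typically HTTP and HTTPS, using routing rules.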

Here’s a brief comparison:

| Type | Purpose |
|---|---|
| Internal | Distributes internal traffic |
| External | Handles external traffic |
| Ingress | Manages external HTTP/HTTPS access |
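The first two types correspond to Service objects. Below is a sketch of both, assuming the backing pods carry the hypothetical label `app: example` and listen on port 8080. The name `example-service` matches the Ingress backend used in the next example.

```yaml
# Internal: a ClusterIP Service is reachable only inside the cluster
# and spreads traffic across the ready pods matching the selector.
apiVersion: v1
kind: Service
metadata:
  name: example-service
spec:
  type: ClusterIP
  selector:
    app: example          # hypothetical pod label
  ports:
    - port: 80
      targetPort: 8080    # assumed container port
---
# External: a LoadBalancer Service asks the cloud provider for an
# external load balancer that forwards traffic to the same pods.
apiVersion: v1
kind: Service
metadata:
  name: example-service-public
spec:
  type: LoadBalancer
  selector:
    app: example
  ports:
    - port: 80
      targetPort: 8080
```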

Configuring Ingress Controllers

To configure an Ingress Controller in Kubernetes, follow these steps:

- Install an Ingress Controller (e.g., Nginx, Traefik).

- Create an Ingress resource specifying the routing rules.

- Apply the Ingress resource using `kubectl apply -f ingress.yaml`.

Here is a simple example of an Ingress resource:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-service
                port:
                  number: 80
```
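Clusters often run more than one ingress controller, so an Ingress usually names its controller through `ingressClassName`. HTTPS is enabled with a `tls` section that references a TLS Secret. This sketch extends the example above. It assumes an NGINX controller registered under the class `nginx` and a hypothetical Secret named `example-com-tls` that holds the certificate and key.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
spec:
  ingressClassName: nginx          # assumed IngressClass of the installed controller
  tls:
    - hosts:
        - example.com
      secretName: example-com-tls  # hypothetical Secret of type kubernetes.io/tls
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-service
                port:
                  number: 80
```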

Best Practices for Load Balancing

To ensure effective load balancing, consider these best practices:

- Regularly monitor your load balancers and traffic patterns.

- Ensure redundancy by deploying multiple load balancers.

- Optimize your cluster’s resource allocation to prevent overloading.

Implementing these practices will help maintain the robustness and efficiency of your applications.

Consider an e-commerce website experiencing fluctuating traffic. By using Kubernetes load balancing, the site can distribute incoming user requests across multiple servers. This not only improves performance but also ensures high availability, even during peak shopping seasons. 🛒

Monitoring and Logging

Effective management of Kubernetes clusters relies heavily on monitoring and logging. These tools keep your applications running smoothly and speed up issue resolution.

Tools for Monitoring Kubernetes

Several tools make monitoring Kubernetes a breeze. Here are some popular choices:

- Prometheus: An open-source system monitoring and alerting toolkit.

- Grafana: A multi-platform analytics tool that works well with Prometheus.

- ELK Stack: Elasticsearch, Logstash, and Kibana for log management and visualization.

These tools help provide real-time insights into your cluster’s performance, helping you make informed decisions. 📊

Implementing Effective Logging

Logging in Kubernetes can help you understand what happened, when, and why. Here are some tips for effective logging:

- Centralized Logging: Collect logs from all nodes and containers into a central system.

- Log Rotation: Regularly archive logs to manage storage efficiently.

- Structured Logs: Use structured formats like JSON for easier parsing and analysis.

For example, you can use Fluentd to collect, process, and forward logs. This helps in gaining a clearer picture of what’s happening inside your Kubernetes cluster. 🔍
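Centralized collection with Fluentd is typically deployed as a DaemonSet, so that one collector runs on every node and reads the container logs under /var/log. This is a rough sketch. The image tag, the Elasticsearch address, and the `fluentd` ServiceAccount (which needs RBAC permission to read pod metadata, not shown) are assumptions to check against the Fluentd project's own manifests.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
    spec:
      serviceAccountName: fluentd    # assumed ServiceAccount with read access to pods
      containers:
        - name: fluentd
          image: fluent/fluentd-kubernetes-daemonset:v1-debian-elasticsearch  # verify against published tags
          env:
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "elasticsearch.logging.svc"   # hypothetical Elasticsearch Service address
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
          volumeMounts:
            - name: varlog
              mountPath: /var/log    # node log directory, including container logs
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
```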

Analyzing Metrics for Better Scaling Decisions

Metrics analysis is crucial for scaling your applications effectively. By monitoring key metrics, you can make informed decisions about scaling up or down. Key metrics include:

- CPU and Memory Usage: Monitor resource consumption to avoid bottlenecks.

- Pod Availability: Ensure your pods are running as expected.

- Response Times: Track how quickly your services are responding.

Using tools like Prometheus and Grafana can help visualize these metrics, making it easier to understand your cluster’s needs. For example, if you notice high CPU usage, you might decide to scale up your pods to handle the load better. 📈
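CPU is not always the best scaling signal. Suppose you expose a per-pod metric such as request rate through a custom metrics adapter (for example, Prometheus Adapter, which is an assumption here and not covered above). In that case, an `autoscaling/v2` HPA can scale on the metric directly. The metric name `http_requests_per_second` and the Deployment name `api` are hypothetical.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: api-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: api                              # hypothetical Deployment
  minReplicas: 2
  maxReplicas: 20
  metrics:
    - type: Pods
      pods:
        metric:
          name: http_requests_per_second   # hypothetical metric served by the adapter
        target:
          type: AverageValue
          averageValue: "100"              # aim for about 100 requests/s per pod
```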

Deploying and Managing Stateful Applications

Deploying and managing stateful applications in Kubernetes can be challenging. Unlike stateless applications, stateful applications require persistent storage and need to maintain consistent state across restarts and scaling events.

Challenges of Stateful Applications

Stateful applications face several challenges when deployed in Kubernetes:

- Persistent Storage: Ensuring data is not lost when pods are restarted or rescheduled.

- Networking: Maintaining stable network identities for pods.

- Scaling: Scaling stateful applications without losing data consistency.

Techniques for Scaling Stateful Applications

Scaling stateful applications requires special techniques to ensure data integrity and consistency:

- StatefulSets: Kubernetes provides StatefulSets, which manage the deployment and scaling of a set of pods and provide guarantees about the ordering and uniqueness of these pods.

- Persistent Volume Claims (PVC): PVCs are used to request storage resources for stateful applications.

- Headless Services: Headless services can be used to provide stable network identities for pods.

Case Studies

Let’s look at some real-life examples:

Example 1: Running a Database

Databases like MySQL or PostgreSQL are stateful applications. Using StatefulSets, you can ensure that each database pod has a persistent volume, ensuring data is not lost during restarts.

Example 2: Message Queues

Applications like Kafka or RabbitMQ require persistent storage for message data. By using StatefulSets and PVCs, you can scale these applications while maintaining data integrity.

Here is a simple YAML configuration for deploying a MySQL database using StatefulSets:

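```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
spec:
  serviceName: "mysql"          # headless Service that gives each pod a stable DNS name
  replicas: 3                   # three independent MySQL instances; replication is not configured here
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql:5.7
          env:
            - name: MYSQL_ROOT_PASSWORD   # the image refuses to start without a root password setting
              valueFrom:
                secretKeyRef:
                  name: mysql-secret      # hypothetical Secret created beforehand
                  key: root-password
          ports:
            - containerPort: 3306
          volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
  volumeClaimTemplates:         # each pod gets its own PersistentVolumeClaim
    - metadata:
        name: mysql-persistent-storage
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 1Gi
```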
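The StatefulSet's `serviceName: "mysql"` refers to a headless Service, which must exist for the pods to get stable DNS names such as `mysql-0.mysql.<namespace>.svc.cluster.local`. A minimal sketch follows.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql            # must match the StatefulSet's serviceName
spec:
  clusterIP: None        # headless: no virtual IP; DNS returns the individual pod addresses
  selector:
    app: mysql
  ports:
    - name: mysql
      port: 3306
```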

Deploying and managing stateful applications in Kubernetes requires a deep understanding of Kubernetes concepts like StatefulSets, PVCs, and headless services.

Security Considerations in Scaling

Scaling in Kubernetes is crucial for managing workloads efficiently as your applications grow. However, scaling introduces various security considerations that must be addressed to ensure your system remains robust and secure.

Role-Based Access Control (RBAC) and Policies

Implementing Role-Based Access Control (RBAC) is essential for managing permissions and ensuring that only authorized users can access certain resources. Here are some best practices for using RBAC:

- 🌟 Principle of Least Privilege: Assign only the necessary permissions required for a role.

- 🔐 Regular Audits: Periodically review and update roles and permissions to match changing requirements.

- 🛡️ Use Namespaces: Organize resources using namespaces and apply policies at the namespace level for better control.
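As an illustration of least privilege scoped to a namespace, the sketch below grants a single user read-only access to pods in one namespace. The namespace `example-namespace` and the user `jane` are hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: example-namespace
rules:
  - apiGroups: [""]                 # "" is the core API group
    resources: ["pods"]
    verbs: ["get", "list", "watch"] # read-only verbs
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: example-namespace
subjects:
  - kind: User
    name: jane                      # hypothetical user
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```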

Best Practices for Secure Scaling

When scaling Kubernetes, consider the following best practices to ensure security:

- 🔒 Network Policies: Define network policies to control traffic between pods and restrict access to sensitive data.

- 📊 Monitoring and Logging: Implement monitoring and logging to detect and respond to potential threats quickly.

- 📜 Immutable Infrastructure: Use immutable infrastructure to reduce the risk of unauthorized changes.
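Network policies fit well with scaling because they select pods by label, so every new replica is covered automatically. The sketch below allows only frontend pods to reach backend pods on port 8080. The labels, namespace, and port are hypothetical, and the policy is only enforced if the cluster's network plugin supports NetworkPolicy.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-allow-frontend
  namespace: example-namespace
spec:
  podSelector:
    matchLabels:
      app: backend            # the policy applies to all current and future backend pods
  policyTypes:
    - Ingress                 # incoming traffic not matched below is denied
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 8080
```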

Consider a scenario where an e-commerce platform experiences a significant increase in traffic during a holiday sale. To handle the load, the platform’s Kubernetes cluster needs to scale quickly:

- 🚀 Auto-scaling: Use Kubernetes’ auto-scaling features to add more instances automatically.

- 🔍 Security Checks: Perform security checks on the new instances to ensure they comply with security policies.

- 📈 Continuous Monitoring: Continuously monitor the new instances to detect any anomalies or breaches in real-time.

Disaster Recovery and High Availability

In today’s digital age, ensuring high availability and implementing robust disaster recovery plans are critical for any application running on Kubernetes. High availability ensures that your services remain accessible even during failures, while disaster recovery focuses on restoring services after a catastrophic event. Let’s dive into the strategies and examples to better understand these concepts.

Strategies for Ensuring High Availability in Kubernetes

Kubernetes offers several built-in features to ensure high availability:

- ReplicaSets: Ensure that a specified number of pod replicas are running at all times.

- Load Balancers: Distribute traffic across multiple pods to prevent any single pod from becoming a bottleneck.

- Self-healing: Automatically replaces failed pods, maintaining the desired state of the application.

Example:

Imagine you have a web application deployed with three replicas. If one pod fails, Kubernetes automatically starts a new pod to replace it. Meanwhile, the remaining two replicas keep serving traffic, so users see little or no downtime.
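Replicas protect against pod failures. A PodDisruptionBudget additionally limits how many replicas can be taken down at once by voluntary disruptions, such as node drains during upgrades or cluster scale-down. The following sketch assumes the web application's pods carry the hypothetical label `app: web`.

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: web-pdb
spec:
  minAvailable: 2          # keep at least two of the three replicas running during voluntary disruptions
  selector:
    matchLabels:
      app: web             # hypothetical label of the web application's pods
```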

Implementing Disaster Recovery Plans in Kubernetes

Disaster recovery in Kubernetes involves:

- Regular Backups: Consistent snapshots of your data and configurations.

- Multi-region Deployments: Spreading your application across multiple regions to mitigate regional failures.

- Automated Failover: Switching to a backup system without manual intervention.

Example:

For a critical database, you can set up automated backups stored in a different region. In a disaster scenario, you can quickly restore from these backups, minimizing data loss.
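Scheduled backups like this are often handled by a tool such as Velero, which is not named above and is swapped in here as one possible choice. The following sketch assumes Velero is installed in the `velero` namespace with a backup storage location in another region. It also assumes a hypothetical namespace `production` that contains the database.

```yaml
apiVersion: velero.io/v1
kind: Schedule
metadata:
  name: daily-backup
  namespace: velero
spec:
  schedule: "0 2 * * *"        # every day at 02:00 (cron syntax)
  template:
    includedNamespaces:
      - production             # hypothetical namespace to back up
    snapshotVolumes: true      # also snapshot persistent volumes where the provider supports it
    ttl: 720h0m0s              # keep each backup for 30 days
```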

Wrap Up

High availability and disaster recovery are essential components of a resilient Kubernetes environment. By leveraging Kubernetes’ features and adopting best practices, organizations can ensure their services remain up and running, even in the face of unexpected failures.

Scaling applications with Kubernetes involves a combination of strategic planning, effective resource management, and leveraging Kubernetes’ powerful features like autoscaling and load balancing.

😊 Happy Deploying!

FAQs

What are resource requests and limits in Kubernetes, and why are they important?

Resource requests specify the minimum amount of CPU and memory a container needs, while limits define the maximum amount a container can use. Setting these parameters helps ensure that your applications have the resources they need to run efficiently and that no single container can monopolize the cluster’s resources.

How can I ensure efficient load balancing in Kubernetes?

Efficient load balancing can be achieved by using Kubernetes services and Ingress controllers. Services provide load balancing across pods, while Ingress controllers manage external access to the cluster and can route traffic based on various rules. Proper configuration and choosing the right type of load balancer are key to efficient load balancing.

How do I handle security when scaling applications in Kubernetes?

Security considerations include ensuring proper Role-Based Access Control (RBAC) configurations, using network policies to control traffic, and regularly updating and patching your Kubernetes components. It’s also important to use secrets management and to follow best practices for container security.

What strategies can I use for disaster recovery and high availability in Kubernetes?

Strategies for disaster recovery and high availability include using multiple clusters in different regions, setting up automated backups, and implementing failover mechanisms. Ensuring that your applications are stateless or using StatefulSets with persistent storage can also enhance availability and recoverability.

What are the common challenges in scaling applications with Kubernetes?

Common challenges include managing resource contention, ensuring network performance, handling stateful applications, configuring autoscalers correctly, and maintaining security and compliance. Proper planning, monitoring, and continuous improvement are essential to address these challenges effectively.