ETL testing, short for Extract, Transform, Load testing, is a crucial process in data warehousing and data integration projects. It ensures that data is correctly extracted from source systems, accurately transformed into a suitable format, and loaded into the target system without any loss of data or integrity issues.

ETL testing helps identify and rectify data issues early in the data pipeline, ensuring reliable and consistent data for business intelligence and analytics.

Key Components of ETL Testing

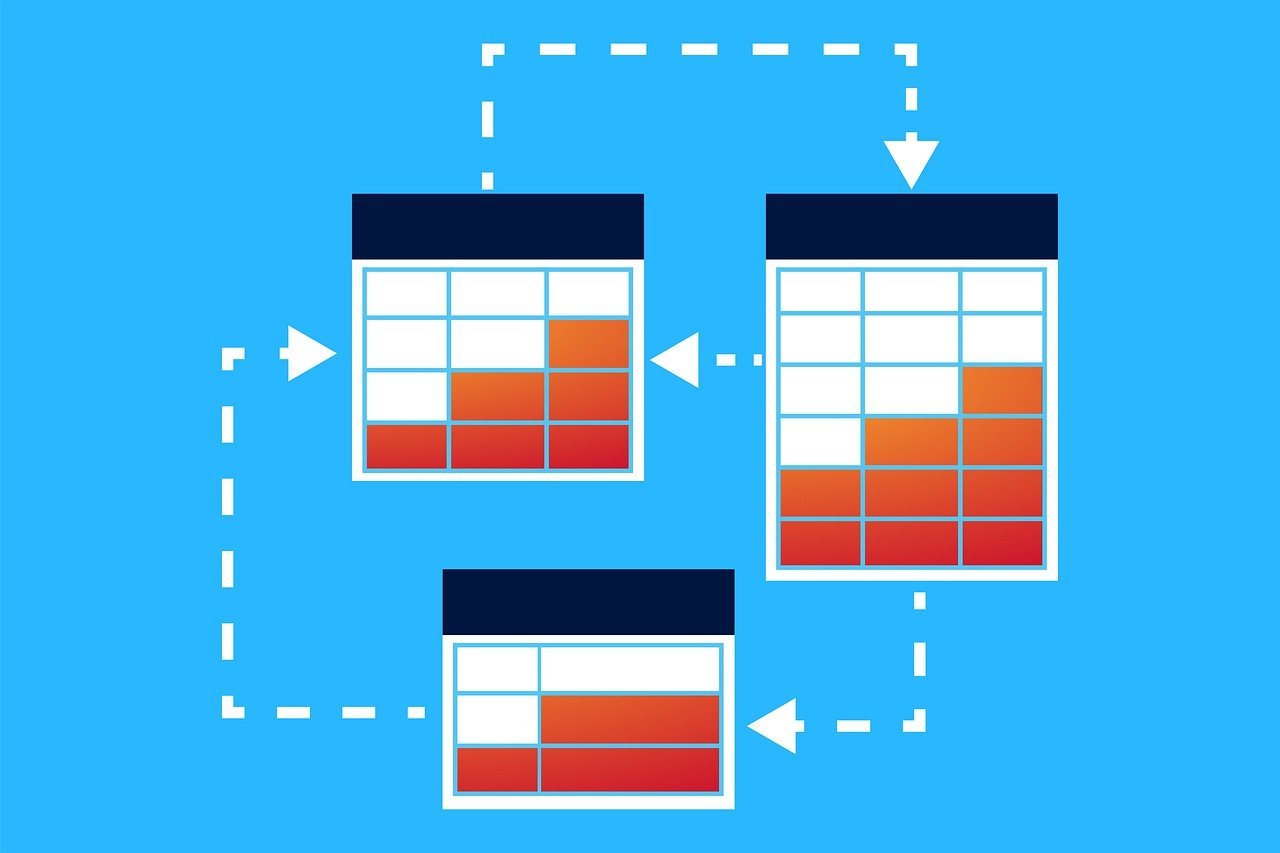

ETL testing covers the three primary phases of the ETL process:

- Extract: This phase involves pulling data from various source systems. It could be databases, flat files, or any other data storage.

- Transform: The extracted data is then transformed into a suitable format. This may include data cleaning, filtering, aggregation, and applying business rules.

- Load: Finally, the transformed data is loaded into the target data warehouse or another storage system.

Purpose of ETL Testing

ETL testing aims to:

- Ensure data accuracy and consistency between source and target systems

- Verify data transformations are correctly applied

- Check data completeness and ensure no data loss

- Validate performance and scalability of the ETL process

Imagine a retail company that wants to analyze its sales data. The sales data is stored in different databases across various store locations. The ETL process will:

- Extract sales data from all store databases

- Transform the data to a uniform format, apply business rules like calculating total sales per day, and clean any anomalies

- Load the transformed data into a central data warehouse for reporting and analysis

ETL Testing in Action

Below is a simple SQL example that illustrates a basic ETL process:

-- Extract

SELECT * FROM source_table;

-- Transform

SELECT col1, col2, (col3 * 1.1) AS transformed_col3

FROM source_table;

-- Load

INSERT INTO target_table (col1, col2, transformed_col3)

SELECT col1, col2, (col3 * 1.1)

FROM source_table;

In this example, data is extracted from ‘source_table’, transformed by applying a 10% increase to ‘col3’, and then loaded into ‘target_table’.

ETL testing ensures that each step of this process is executed correctly and data integrity is maintained.
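
To make that concrete, here is a minimal Python sketch that runs the same load against an in-memory SQLite database and then checks it. It assumes ‘col1’ is a unique key and that SQLite stands in for the real source and target systems; neither assumption comes from the example above, and the helper names run_etl and check_etl are hypothetical.

```python
import sqlite3

def run_etl(conn):
    """Load source_table into target_table, raising col3 by 10%."""
    conn.execute(
        "INSERT INTO target_table (col1, col2, transformed_col3) "
        "SELECT col1, col2, col3 * 1.1 FROM source_table"
    )

def check_etl(conn, tolerance=1e-9):
    """Return a list of problems; an empty list means both checks passed."""
    problems = []
    # Completeness: every source row should have arrived in the target.
    src_count = conn.execute("SELECT COUNT(*) FROM source_table").fetchone()[0]
    tgt_count = conn.execute("SELECT COUNT(*) FROM target_table").fetchone()[0]
    if src_count != tgt_count:
        problems.append(f"row count mismatch: {src_count} vs {tgt_count}")
    # Transformation: recompute the rule independently and compare per row.
    # Assumption: col1 uniquely identifies a row in both tables.
    rows = conn.execute(
        "SELECT s.col1, s.col3, t.transformed_col3 "
        "FROM source_table s JOIN target_table t ON s.col1 = t.col1"
    ).fetchall()
    for key, original, transformed in rows:
        if abs(transformed - original * 1.1) > tolerance:
            problems.append(f"col1={key}: expected {original * 1.1}, got {transformed}")
    return problems

# Hypothetical sample data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE source_table (col1 INTEGER PRIMARY KEY, col2 TEXT, col3 REAL)")
conn.execute("CREATE TABLE target_table (col1 INTEGER PRIMARY KEY, col2 TEXT, transformed_col3 REAL)")
conn.executemany("INSERT INTO source_table VALUES (?, ?, ?)", [(1, "a", 100.0), (2, "b", 250.0)])
run_etl(conn)
problems = check_etl(conn)
```

The comparison uses a small tolerance rather than exact equality because the transformed values are floating-point numbers.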

Importance of ETL Testing

The primary goal of ETL testing is to ensure data accuracy, maintain data integrity, and comply with regulatory requirements. Let’s explore why ETL testing is indispensable for businesses.

| Aspect | Description |
|---|---|
| Ensuring Data Accuracy | Identifies and rectifies data discrepancies to prevent financial losses or strategic missteps. |
| Maintaining Data Integrity | Verifies correct data transformations to maintain consistency and reliability, avoiding data loss or corruption. |
| Compliance and Regulatory Requirements | Ensures data processing aligns with regulations to avoid legal penalties and maintain customer trust. |
| Enhancing Data Quality | Identifies and corrects errors, inconsistencies, and duplicates to provide reliable data for analytics and reporting. |

Ensuring Data Accuracy

- Accurate data is essential for informed business decisions.

- Inaccurate data can lead to incorrect conclusions, financial losses, or strategic missteps.

- Example: A retail company relying on sales data for inventory forecasting could face overstocking or stockouts due to data inaccuracies.

- ETL testing helps identify and rectify data discrepancies before they impact the business.

Maintaining Data Integrity

- Data integrity ensures consistency and reliability throughout the data lifecycle.

- ETL testing verifies correct data transformations, preventing loss or corruption.

- Example: In healthcare, maintaining the integrity of patient records is crucial to avoid incorrect treatment plans.

- ETL testing is vital for maintaining data integrity and reliability.

Compliance and Regulatory Requirements

- ETL testing ensures compliance with regulatory requirements, avoiding legal penalties.

- Example: Financial institutions may be subject to regulations such as GDPR (for personal data of people in the EU) and SOX (for financial reporting by U.S.-listed companies).

- ETL testing helps verify that data handling meets regulatory standards, safeguarding sensitive information.

Enhancing Data Quality

- High-quality data is crucial for successful business operations.

- ETL testing identifies and corrects errors, inconsistencies, and duplicates.

- Reliable data enhances analytics and reporting accuracy.

- Example: Marketing teams can create more targeted campaigns with high-quality customer data, leading to better engagement and higher conversion rates.

Types of ETL Testing

ETL (Extract, Transform, Load) testing is crucial in ensuring that data is accurately and efficiently moved from various sources to a data warehouse. Let’s explore the different types of ETL testing, along with simple examples.

Data Completeness Testing

Data completeness testing ensures that all expected data is loaded into the target system. Imagine you are transferring customer records from one database to another. You must check if all customer records are present in the target database.

Example:

| Source Database | Target Database |
|---|---|
| 1000 records | 1000 records |
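
Matching counts are a necessary check, but they can hide a record that was dropped and another that was added by mistake. The sketch below compares record keys as well as counts. The ID values and the function name completeness_report are hypothetical, chosen only for illustration.

```python
def completeness_report(source_ids, target_ids):
    """Compare distinct record IDs between source and target."""
    source, target = set(source_ids), set(target_ids)
    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing_in_target": sorted(source - target),
        "unexpected_in_target": sorted(target - source),
    }

# Hypothetical data: both sides hold 1000 records, but one customer was
# lost and one stray record appeared, so the counts alone look fine.
source_ids = range(1, 1001)
target_ids = [i for i in range(1, 1001) if i != 42] + [5000]
report = completeness_report(source_ids, target_ids)
```

Here both counts are 1000, yet the report still lists record 42 as missing and record 5000 as unexpected.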

Data Transformation Testing

Data transformation testing verifies that data transformations are correctly applied. For instance, if you need to convert all dates to a specific format, you must ensure the transformation rules are correctly implemented.

Example: Converting date format from MM/DD/YYYY to YYYY-MM-DD.

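-- SQL Server: parse an MM/DD/YYYY string (style 101), then format it as YYYY-MM-DD (style 23)

SELECT CONVERT(VARCHAR(10), CONVERT(DATE, '12/31/2024', 101), 23) AS DateFormatted;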
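
The MM/DD/YYYY to YYYY-MM-DD rule can also be tested outside the database by recomputing the expected value for each source date and comparing it with what landed in the target. This is a sketch under that assumption; to_iso_date and check_date_transformation are hypothetical helper names.

```python
from datetime import datetime

def to_iso_date(value):
    """Convert an MM/DD/YYYY string to YYYY-MM-DD; raises ValueError on bad input."""
    return datetime.strptime(value, "%m/%d/%Y").date().isoformat()

def check_date_transformation(pairs):
    """pairs holds (source_value, target_value) tuples; return the ones that fail.

    Each failure is (source_value, target_value, expected), where expected is
    None if the source value itself is not a valid MM/DD/YYYY date.
    """
    failures = []
    for source_value, target_value in pairs:
        try:
            expected = to_iso_date(source_value)
        except ValueError:
            expected = None
        if expected != target_value:
            failures.append((source_value, target_value, expected))
    return failures
```

A swapped day and month, such as 01/02/2024 loaded as 2024-02-01, is a typical defect this kind of check is meant to catch.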

Data Quality Testing

Data quality testing checks for data accuracy, consistency, and integrity. This involves verifying data against predefined rules and constraints to ensure it meets quality standards.

Example: Checking for null values in a column where data is mandatory.

SELECT * FROM Customers WHERE Email IS NULL;
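
A similar check can be scripted in Python once rows have been fetched. The sketch below flags missing mandatory values and duplicate keys. The ‘CustomerId’ column is an assumption added for illustration, since only ‘Email’ appears in the query above.

```python
from collections import Counter

def quality_issues(rows, mandatory=("Email",), unique=("CustomerId",)):
    """Return (row_index, column, message) tuples for each rule violation."""
    issues = []
    # Mandatory columns must not be NULL or blank.
    for index, row in enumerate(rows):
        for column in mandatory:
            value = row.get(column)
            if value is None or str(value).strip() == "":
                issues.append((index, column, "missing mandatory value"))
    # Columns declared unique must not repeat a value.
    for column in unique:
        counts = Counter(row.get(column) for row in rows)
        for value, count in counts.items():
            if count > 1:
                issues.append((None, column, f"duplicate value {value!r} appears {count} times"))
    return issues

# Hypothetical rows as they might be fetched from the Customers table.
customers = [
    {"CustomerId": 1, "Email": "a@example.com"},
    {"CustomerId": 2, "Email": None},
    {"CustomerId": 2, "Email": "   "},
]
issues = quality_issues(customers)
```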

Performance Testing

Performance testing ensures that the ETL process completes within the expected time frame, especially with large data volumes. This is crucial for maintaining efficient data workflows.

Example: Measuring the time taken to load a million records.
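
One way to take such a measurement is to time the load step in isolation. The sketch below uses an in-memory SQLite table as a stand-in target, so the absolute numbers say nothing about a real warehouse. The table layout and the function name timed_load are assumptions.

```python
import sqlite3
import time

def timed_load(row_count):
    """Insert row_count rows into a fresh table; return (rows_loaded, seconds)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE target_table (id INTEGER PRIMARY KEY, amount REAL)")
    # Build the rows first so that only the load itself is timed.
    rows = [(i, i * 0.5) for i in range(row_count)]
    start = time.perf_counter()
    with conn:  # commits the inserts as a single transaction
        conn.executemany("INSERT INTO target_table VALUES (?, ?)", rows)
    elapsed = time.perf_counter() - start
    loaded = conn.execute("SELECT COUNT(*) FROM target_table").fetchone()[0]
    conn.close()
    return loaded, elapsed

loaded, elapsed = timed_load(100_000)
print(f"loaded {loaded} rows in {elapsed:.3f} s")
```

In a real performance test, the elapsed time would be compared against an agreed threshold, and the run repeated with growing volumes to see how load time scales.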

Data Integration Testing

Data integration testing checks if data from different sources are correctly integrated into the target system. For example, integrating customer data from CRM and sales data from ERP systems.
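
A simple integration check joins the two feeds on their shared key and reports sales that reference no known customer. The field names below (‘customer_id’, ‘name’, ‘sale_id’, ‘amount’) are assumptions for this sketch, not a real CRM or ERP schema.

```python
def integrate(crm_customers, erp_sales):
    """Attach CRM customer names to ERP sales; return (integrated, orphans)."""
    customers_by_id = {c["customer_id"]: c for c in crm_customers}
    integrated, orphans = [], []
    for sale in erp_sales:
        customer = customers_by_id.get(sale["customer_id"])
        if customer is None:
            # The sale points at a customer the CRM does not know about.
            orphans.append(sale)
        else:
            integrated.append({**sale, "customer_name": customer["name"]})
    return integrated, orphans

# Hypothetical sample feeds.
crm_customers = [{"customer_id": 1, "name": "Acme"}, {"customer_id": 2, "name": "Globex"}]
erp_sales = [
    {"sale_id": 10, "customer_id": 1, "amount": 120.0},
    {"sale_id": 11, "customer_id": 2, "amount": 80.0},
    {"sale_id": 12, "customer_id": 9, "amount": 15.0},
]
integrated, orphans = integrate(crm_customers, erp_sales)
```

Every input sale should end up either integrated or reported as an orphan, which gives the test a simple invariant to assert.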

Regression Testing

Regression testing ensures that new changes or updates do not adversely affect the existing ETL processes. This is important for maintaining system stability over time.

Example: After updating the ETL script, ensure previous transformations still work correctly.
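
A common way to do this is to keep the output of the previous version as a baseline and compare it with the output of the updated script, looking only at the columns that existed before the change. The two transform functions below are hypothetical stand-ins for the old and new script.

```python
def transform_v1(row):
    """Original transformation: compute the line total."""
    return {"id": row["id"], "total": round(row["price"] * row["qty"], 2)}

def transform_v2(row):
    """Updated transformation: adds a column but should leave the others unchanged."""
    result = transform_v1(row)
    result["currency"] = "USD"
    return result

def regression_diff(baseline, candidate, columns):
    """Return (id, column, old_value, new_value) for each pre-existing column that changed."""
    diffs = []
    if len(baseline) != len(candidate):
        diffs.append((None, "row_count", len(baseline), len(candidate)))
    # Rows are compared positionally, so both runs must use the same input order.
    for old, new in zip(baseline, candidate):
        for column in columns:
            if old[column] != new.get(column):
                diffs.append((old["id"], column, old[column], new.get(column)))
    return diffs

source = [{"id": 1, "price": 9.99, "qty": 3}, {"id": 2, "price": 5.0, "qty": 2}]
baseline = [transform_v1(r) for r in source]
candidate = [transform_v2(r) for r in source]
diffs = regression_diff(baseline, candidate, ["id", "total"])
```

An empty diff means the update added its new column without disturbing the existing ones.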

ETL Testing Process

ETL (Extract, Transform, Load) testing ensures data integrity while data is migrated from various sources to a data warehouse. This process is crucial for maintaining data accuracy and consistency. Let’s dive into the steps involved in the ETL testing process.

Requirement Gathering

Requirement gathering is the first step in ETL testing. It involves understanding the data sources, the business needs, and the expected output. Clear communication with stakeholders is essential during this phase. For example, if a company wants to migrate sales data from multiple regions, the requirements would include the types of data, the sources, and any specific transformations needed.

Test Planning and Strategy

Test planning involves defining the scope, objectives, resources, and timelines for the ETL testing process. A well-defined strategy helps in executing the tests efficiently. This phase also includes risk assessment and mitigation plans. Here’s a simple table to illustrate:

| Activity | Details |
|---|---|
| Scope | Data migration from CRM to Data Warehouse |
| Objectives | Ensure data accuracy and completeness |
| Resources | 5 Test Analysts, 1 Test Lead |
| Timeline | 4 Weeks |

Test Case Design

Designing test cases involves creating detailed test scenarios and scripts to validate the ETL process. Each test case should cover different aspects like data extraction, transformation rules, and loading criteria. For instance, a test case might include validating the transformation of date formats from ‘MM/DD/YYYY’ to ‘YYYY-MM-DD’.

Test Environment Setup

Setting up a test environment that mirrors the production environment is crucial for accurate testing. This includes configuring the hardware, software, and network settings. For example, if the ETL process uses a specific database version, the test environment should have the same version installed.

Test Execution

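During this phase, the actual ETL tests are executed. Testers run the test cases and compare the results with expected outcomes. Any discrepancies are noted for further analysis. Here’s a simple Python snippet that applies a date transformation; because an explicit format is given, pd.to_datetime raises an error when a value does not match MM/DD/YYYY, so malformed dates surface immediately: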

import pandas as pd

df = pd.read_csv('source_data.csv')

df['date'] = pd.to_datetime(df['date'], format='%m/%d/%Y')

df.to_csv('transformed_data.csv', index=False)
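
To turn a transformation like this into executable test cases, the expected outcomes can be written as assertions and run with a test runner. The sketch below uses only the standard library: transform is a hypothetical stand-in for the pandas snippet above, and the CSV content is made-up sample data.

```python
import csv
import io
import unittest
from datetime import datetime

def transform(csv_text):
    """Rewrite the 'date' column from MM/DD/YYYY to YYYY-MM-DD."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        row["date"] = datetime.strptime(row["date"], "%m/%d/%Y").date().isoformat()
        rows.append(row)
    return rows

# Hypothetical source data, kept inline so the tests need no files.
SOURCE = "id,date\n1,01/15/2024\n2,12/31/2023\n"

class TransformTests(unittest.TestCase):
    def test_row_count_is_preserved(self):
        self.assertEqual(len(transform(SOURCE)), 2)

    def test_dates_are_iso_formatted(self):
        dates = [row["date"] for row in transform(SOURCE)]
        self.assertEqual(dates, ["2024-01-15", "2023-12-31"])

    def test_malformed_date_is_rejected(self):
        with self.assertRaises(ValueError):
            transform("id,date\n3,2024-01-15\n")

def run_tests():
    """Run the suite and return the result object for reporting."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TransformTests)
    return unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
```

The result object returned by run_tests exposes the failures and errors, which is the information a tester would carry into a defect report.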

Defect Logging and Reporting

Any defects or discrepancies found during test execution are logged and reported. This helps in tracking and resolving issues promptly. Tools like JIRA or Bugzilla are commonly used for this purpose. For example, if a data mismatch is found, it is logged with details like defect ID, severity, and steps to reproduce.

Test Closure

The final phase involves preparing test closure reports and obtaining sign-off from stakeholders. This includes summarizing the testing activities, outcomes, and lessons learned. A well-documented closure report ensures that all aspects of the ETL testing process are covered and helps in future projects.

Common ETL Testing Challenges

Handling Large Volumes of Data

One of the significant challenges in ETL testing is managing large volumes of data. When dealing with massive datasets, it becomes difficult to ensure performance and accuracy. A common approach is to use data sampling techniques and leverage parallel processing to make the task manageable.
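
As an illustration of the sampling idea, the sketch below draws a reproducible random sample of keys and compares only those rows between source and target. It assumes both sides can be looked up by key; the dictionaries here are hypothetical stand-ins for keyed queries against the real systems.

```python
import random

def sample_keys(keys, sample_size, seed=0):
    """Pick a reproducible random sample of keys (all keys if there are fewer)."""
    ordered = sorted(keys)
    rng = random.Random(seed)  # fixed seed so a failing run can be repeated
    return rng.sample(ordered, min(sample_size, len(ordered)))

def compare_sample(source, target, sample_size, seed=0):
    """Return the sampled keys whose target row is missing or differs from the source."""
    mismatches = []
    for key in sample_keys(source.keys(), sample_size, seed):
        if target.get(key) != source[key]:
            mismatches.append(key)
    return sorted(mismatches)

# Hypothetical data: 10,000 rows, of which only 500 are compared.
source = {i: (i, i * 2) for i in range(10_000)}
target = dict(source)
mismatches = compare_sample(source, target, sample_size=500)
```

Sampling trades certainty for speed: a defect that affects only a few rows can fall outside the sample, so it complements full count and checksum comparisons rather than replacing them.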

Dealing with Complex Transformations

ETL processes often involve complex data transformations, which can be challenging to test. For example, transforming nested JSON data into a relational database format requires meticulous testing to ensure all data is accurately transformed. Implementing unit tests for individual transformation functions can help mitigate these complexities.
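
For instance, a function that flattens one nested order document into relational rows is small enough to unit test on its own. The document shape and the names below are assumptions made for this sketch.

```python
import json

def flatten_order(order):
    """Turn one nested order document into (order_row, item_rows)."""
    order_row = {
        "order_id": order["order_id"],
        "customer_name": order["customer"]["name"],
    }
    # One child row per item, linked back to the parent by order_id.
    item_rows = [
        {"order_id": order["order_id"], "line_no": n, "sku": item["sku"], "qty": item["qty"]}
        for n, item in enumerate(order["items"], start=1)
    ]
    return order_row, item_rows

# Hypothetical nested input.
raw = '{"order_id": 7, "customer": {"name": "Ada"}, "items": [{"sku": "A1", "qty": 2}, {"sku": "B2", "qty": 1}]}'
order_row, item_rows = flatten_order(json.loads(raw))

# Unit-style checks: the parent row is correct and no item was dropped.
assert order_row == {"order_id": 7, "customer_name": "Ada"}
assert len(item_rows) == 2
```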

Ensuring Data Consistency

Data consistency is crucial for reliable ETL processes. Inconsistent data can lead to incorrect business decisions. To tackle this, validation rules and checks are often put in place. For example, ensuring that all date fields are in the same format or that numerical values fall within expected ranges.
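
Rules like these can be expressed as small predicates and applied to every row. In the sketch below, the column names and the 1 to 1000 quantity range are hypothetical examples, not values from a real specification.

```python
import re

ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")

# One predicate per column; each returns True when the value is acceptable.
RULES = {
    "order_date": lambda v: isinstance(v, str) and ISO_DATE.fullmatch(v) is not None,
    "quantity": lambda v: isinstance(v, int) and 1 <= v <= 1000,
}

def violations(rows, rules=RULES):
    """Return (row_index, column, value) for every value that breaks its rule."""
    found = []
    for index, row in enumerate(rows):
        for column, rule in rules.items():
            if not rule(row.get(column)):
                found.append((index, column, row.get(column)))
    return found

rows = [
    {"order_date": "2024-03-01", "quantity": 5},
    {"order_date": "03/01/2024", "quantity": 5},   # wrong date format
    {"order_date": "2024-03-02", "quantity": 0},   # out of range
]
bad = violations(rows)
```

The date rule here checks only the shape of the string; a stricter rule would also parse it to reject impossible dates.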

Managing Test Data

Creating and managing test data that accurately reflects real-world scenarios is another challenge in ETL testing. Using synthetic data generators or anonymizing production data are common practices to create realistic test datasets. This ensures that test scenarios are as close to actual conditions as possible.
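
Both practices can be sketched in a few lines of Python. The salt value, the field names, and the value ranges below are assumptions for illustration. Salted hashing is better described as pseudonymization, and on its own it may not satisfy a given privacy requirement.

```python
import hashlib
import random

def anonymize_email(email, salt="test-env-salt"):
    """Replace an email with a stable pseudonym so joins on it still work."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()[:12]
    return f"user_{digest}@example.com"

def synthetic_customers(count, seed=0):
    """Generate reproducible fake customer rows."""
    rng = random.Random(seed)  # fixed seed gives the same dataset on every run
    return [
        {
            "customer_id": i,
            "email": f"customer{i}@example.com",
            "lifetime_value": round(rng.uniform(0, 5000), 2),
        }
        for i in range(1, count + 1)
    ]

masked = anonymize_email("alice@corp.test")
customers = synthetic_customers(3)
```

Because the same input always maps to the same pseudonym, relationships between tables survive the masking, which keeps join-based tests meaningful.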

Automating ETL Tests

Automation can significantly enhance the efficiency of ETL testing. Automated tests can run more frequently and consistently than manual tests, catching issues early in the development cycle. ETL platforms such as Apache NiFi, Talend, and Informatica can schedule and orchestrate pipeline runs, and dedicated testing tools such as QuerySurge can automate the validation itself, which helps streamline the testing process.

ETL Testing Tools

ETL (Extract, Transform, Load) testing tools play a crucial role in data management by ensuring the accuracy and integrity of data as it moves through different stages. Let’s explore some popular ETL testing tools, compare them, and discuss criteria for selecting the most suitable one for your needs.

Popular ETL Testing Tools

Here are some of the most widely used ETL testing tools:

- Informatica Data Validation – Known for its robust data validation capabilities.

- QuerySurge – A tool that automates data testing and validation.

- Talend Open Studio – An open-source tool offering extensive ETL functionalities.

- Datagaps ETL Validator – Focuses on data quality and integrity.

Comparison of ETL Testing Tools

Let’s compare these tools based on some key features:

| Tool | Key Feature | Pro | Con |
|---|---|---|---|
| Informatica Data Validation | Data Validation | Comprehensive validation | High cost |
| QuerySurge | Automation | Automated testing | Steep learning curve |
| Talend Open Studio | Open-Source | Free to use | Limited support |
| Datagaps ETL Validator | Data Quality | Focus on data integrity | Requires customization |

Criteria for Selecting an ETL Testing Tool

When choosing an ETL testing tool, consider the following criteria:

- Scalability – Can the tool handle increasing data volumes?

- Cost – Does the tool fit within your budget?

- Ease of Use – Is the tool user-friendly?

- Support and Documentation – Are there adequate resources and support available?

For example, if you are a small business with limited funds, Talend Open Studio might be a suitable choice due to its open-source nature. On the other hand, larger enterprises might prefer Informatica Data Validation for its comprehensive capabilities despite its higher cost.

Best Practices for ETL Testing

Early Involvement in the ETL Process

The key to successful ETL testing starts with early involvement in the ETL process. Engaging QA from the outset ensures that potential issues are identified early, reducing the risk of costly errors later on.

Comprehensive Test Planning

A thorough test plan is critical for effective ETL testing. This plan should include:

- Data validation checks

- Data transformation validation

- Performance testing

- Security testing

Creating detailed test plans helps in covering all aspects of the ETL process, ensuring that data integrity and quality are maintained throughout.

Automated Testing

Automated testing tools can significantly enhance the efficiency and accuracy of ETL testing. By automating repetitive tasks, QA can focus on more complex scenarios. Test runs can be scheduled alongside pipelines built on platforms like Apache NiFi, Talend, and Informatica, while dedicated tools such as QuerySurge or Datagaps ETL Validator automate the data comparisons, giving consistent and repeatable results.

Continuous Monitoring and Validation

Continuous monitoring and validation are essential for maintaining data quality over time. Implementing real-time monitoring tools can help identify issues as they arise, allowing for prompt resolution. Regular data validation checks ensure that the ETL process consistently produces accurate and reliable data.

Collaboration with Development Teams

Effective collaboration between QA and development teams is crucial for successful ETL testing. Regular communication and feedback loops help in identifying and resolving issues quickly. By working together, teams can ensure that the ETL process meets all business requirements and delivers high-quality data.

FAQs

How can I ensure the success of my ETL Testing process?

To ensure successful ETL Testing, you should:

- Get involved early in the ETL process

- Develop a comprehensive test plan

- Utilize automated testing tools

- Continuously monitor and validate data

- Collaborate closely with development and business teams

Can ETL Testing be automated?

Yes, ETL Testing can be automated using tools like QuerySurge, Informatica Data Validation, and Datagaps ETL Validator. Automation helps improve efficiency, accuracy, and coverage of tests.

How often should ETL Testing be performed?

ETL Testing should be performed regularly, particularly after any changes to source data, ETL processes, or the target data warehouse. Continuous testing is ideal to ensure ongoing data integrity and quality.

What is the difference between ETL Testing and Data Warehouse Testing?

ETL Testing focuses on the correctness of the ETL processes (extracting, transforming, and loading data), while Data Warehouse Testing encompasses a broader scope, including data warehouse architecture, schema, integration, and performance.

How do I handle large volumes of data during ETL Testing?

Handling large volumes of data can be managed through:

- Data sampling techniques

- Parallel processing

- Incremental testing

- Efficient use of ETL testing tools that support high-volume data testing