Introduction: The Unseen Language of Data

In this era, data is king. But how we write, store, and transmit that data is a story of constant evolution. For over two decades, one format has reigned supreme: JSON (JavaScript Object Notation). It’s the universal language of the web, the backbone of countless APIs, and a familiar sight in almost every developer’s code editor.

However, a new technological wave is reshaping our digital landscape: Artificial Intelligence. With the rise of Large Language Models (LLMs) and complex AI systems, our old ways of handling data are starting to show their age. The very features that made JSON a champion for human-machine interaction are now becoming bottlenecks for machine-machine communication.

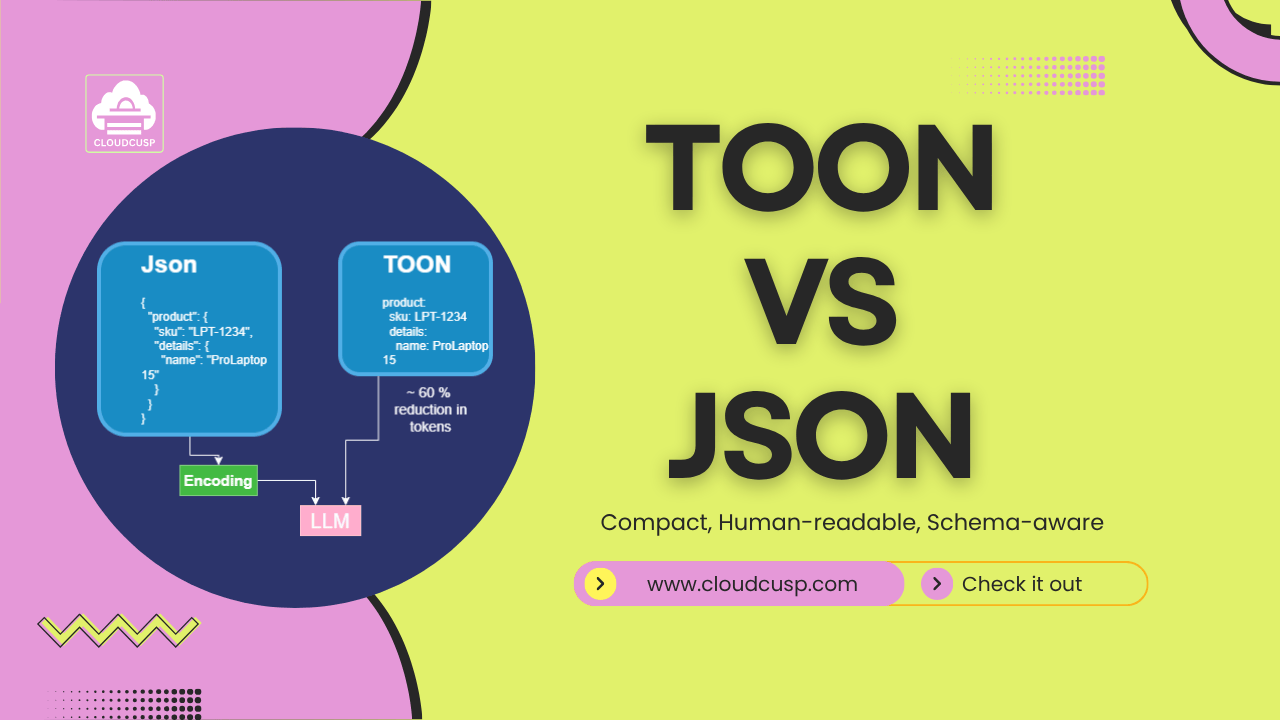

This is where a new contender emerges: TOON (Token-Oriented Object Notation). As its name suggests, TOON is not just another data format. It’s a format purpose-built from the ground up for the AI era. It’s designed to speak the language that AI models understand best: the language of tokens.

This article will dive deep into the world of data formats. We’ll explore why JSON became so dominant, where it falls short in the context of AI, and how TOON aims to solve these very specific problems, saving developers significant costs along the way. We’ll compare them head-to-head, look at practical examples, and discuss whether TOON has what it takes to challenge the king.


1: A Refresher on JSON – The People’s Champion

Before we can appreciate the challenger, we must first understand the champion. JSON is everywhere. But what exactly is it?

What is JSON?

Think of JSON as a simple set of grammar rules for writing down structured information. It’s like a digital filing cabinet where you can label folders and put documents inside them.

It uses a very human-readable structure based on key-value pairs.

- A key is like a label on a folder (e.g., "productName").

- A value is the content inside that folder (e.g., "ProLaptop 15").

These pairs are enclosed in curly braces {}. If you have a list of items, you can put them in square brackets [].

A Simple JSON Example

Let’s imagine we want to describe a product from an e-commerce store. In JSON, it would look like this:

{

"sku": "LPT-1234",

"productName": "ProLaptop 15",

"inStock": true,

"price": 1299.99

}

This is clean, easy to read, and easy for both humans and machines to understand.

Why Did JSON Become So Popular?

JSON’s dominance is no accident. Several factors contributed to its widespread adoption:

- Human-Readable: Its syntax is simple and textual, making it easy for developers to read, write, and debug.

- Lightweight: Compared to older formats like XML, it’s far less verbose, meaning smaller file sizes and faster transmission over networks.

- Language Agnostic: Though it originates from JavaScript, parsers for JSON exist for virtually every programming language.

- Native Browser Support: Web browsers have built-in functions to work with JSON, making it the natural choice for web APIs.

For years, these qualities made JSON the perfect default for data exchange, especially between a server and a user’s web browser.

2: The AI Bottleneck – The “Token Tax” of JSON

The world is changing. The primary consumer of data is no longer just a web browser. It’s increasingly an AI model. And for AI, JSON’s strengths can turn into weaknesses. This introduces a new problem developers are now calling the “token tax.”

The Problem of Tokens

To understand the core issue, we need to understand how AI models, particularly LLMs like GPT-4, “read.” They don’t see text as we do. They see it as a stream of tokens.

- A token is a common sequence of characters. A word might be one token, or it might be broken into several.

- For example, the word “unhappiness” might be broken into tokens like “un”, “happi”, and “ness”.

- AI models have a limited “context window,” which is the maximum number of tokens they can process at once (e.g., 128,000 tokens).

This limit makes every single token count. And this is where JSON starts to feel expensive.

A Real-World Look at the Token Tax

Let’s take a real example. Imagine you’re building an app that sends product data to an LLM for analysis. In JSON, it might look like this:

{

"products": [

{ "sku": "LPT-1234", "productName": "ProLaptop 15", "inStock": true, "price": 1299.99 },

{ "sku": "MSC-5678", "productName": "Wireless Mouse", "inStock": false, "price": 25.50 },

{ "sku": "KBD-9101", "productName": "Mechanical Keyboard", "inStock": true, "price": 75.00 }

]

}

This simple, readable JSON snippet consumes a surprising 257 tokens. The actual data is minimal, but all the quotes, commas, colons, and repeated keys ("sku", "productName", "inStock", "price") are what’s really costing you.

For this small example, the overhead might seem trivial. But scale that to hundreds of API calls with thousands of records, and suddenly you’re paying real, significant costs for syntax rather than data. This is the token tax in action, and it’s a problem that TOON is designed to solve.
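One rough way to see this overhead is to compare how many characters in the snippet carry actual values and how many are structure. The sketch below uses character counts as a stand-in for tokens, which is only an approximation, since real counts depend on the model's tokenizer. The helper name `structure_overhead` is made up for illustration.

```python
import json

products = {
    "products": [
        {"sku": "LPT-1234", "productName": "ProLaptop 15", "inStock": True, "price": 1299.99},
        {"sku": "MSC-5678", "productName": "Wireless Mouse", "inStock": False, "price": 25.50},
        {"sku": "KBD-9101", "productName": "Mechanical Keyboard", "inStock": True, "price": 75.00},
    ]
}


def structure_overhead(data):
    """Return the fraction of compact-JSON characters that are not values.

    Characters are only a rough proxy for tokens; this is illustrative.
    """
    total = len(json.dumps(data, separators=(",", ":")))
    payload = 0
    for record in data["products"]:
        for value in record.values():
            # Serialize each value on its own and drop any surrounding quotes.
            payload += len(json.dumps(value).strip('"'))
    return (total - payload) / total


print(f"{structure_overhead(products):.0%} of the characters are keys and punctuation")
```

Even with whitespace stripped, well over half of the characters here are keys and punctuation rather than values, and that share stays roughly constant as more records are added.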

3: Enter TOON – The AI-Native Challenger

This is where TOON (Token-Oriented Object Notation) enters the scene. It’s a format designed with one primary goal: to be as efficient as possible for AI models to process, slashing that token tax.

What is TOON?

TOON is a data serialization format that prioritizes token efficiency and parsing speed over human readability. Its structure is designed to map more directly to the way AI models think.

The name itself, Token-Oriented, is the key. It means the format’s structure is built around minimizing the number of tokens required to represent complex data.

A Simple TOON Example

Let’s represent that same product data from our JSON example in TOON. The difference is immediate:

products[3]{sku,productName,inStock,price}:

  LPT-1234,ProLaptop 15,true,1299.99

  MSC-5678,Wireless Mouse,false,25.50

  KBD-9101,Mechanical Keyboard,true,75.00

This TOON version uses just 166 tokens. That’s a 35% reduction right off the bat. It looks different, but it’s still structured and, once you learn the rules, quite logical.

4: The Core Advantages of TOON for AI

TOON’s design philosophy directly attacks the weaknesses of JSON when it comes to AI applications. Here are its key advantages.

1. Superior Token Efficiency

This is the number one benefit. By stripping out unnecessary characters and rethinking structure, TOON significantly reduces the token count.

Real-world benchmarks show incredible savings across different types of data:

| Dataset | JSON Tokens | TOON Tokens | Savings |

|---|---|---|---|

| GitHub Repos (100 records) | 15,145 | 8,745 | 42.3% |

| Analytics (180 days) | 10,977 | 4,507 | 58.9% |

| E-commerce Orders | 257 | 166 | 35.4% |

The sweet spot? Uniform tabular data — records with consistent schemas across many rows. The more repetitive your JSON keys, the more TOON can optimize.
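The Savings column is simply the relative drop in token count. As a quick sanity check, the figures in the table can be reproduced with a few lines of Python (the function name `savings_percent` is just an illustrative choice):

```python
def savings_percent(json_tokens, toon_tokens):
    """Percentage of tokens saved by switching from JSON to TOON."""
    return (json_tokens - toon_tokens) / json_tokens * 100


# Token counts copied from the benchmark table above.
benchmarks = {
    "GitHub Repos (100 records)": (15_145, 8_745),
    "Analytics (180 days)": (10_977, 4_507),
    "E-commerce Orders": (257, 166),
}

for name, (json_tokens, toon_tokens) in benchmarks.items():
    print(f"{name}: {savings_percent(json_tokens, toon_tokens):.1f}% saved")
# GitHub Repos (100 records): 42.3% saved
# Analytics (180 days): 58.9% saved
# E-commerce Orders: 35.4% saved
```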

2. The Power of Tabular Arrays

This is TOON’s most innovative feature. Imagine you’re filling out a form for a hundred products. Would you write ‘SKU:’, ‘Name:’, ‘Price:’ on every single form? Of course not. You’d make one form with the labels and then just fill in the data for each product.

This is the simple yet brilliant idea behind TOON’s Tabular Arrays.

- JSON’s Approach (repetitive):

[

{ "sku": "LPT-1234", "qty": 2, "price": 1299.99 },

{ "sku": "MSC-5678", "qty": 1, "price": 25.50 }

]

- TOON’s Approach (efficient):

[2]{sku,qty,price}:

  LPT-1234,2,1299.99

  MSC-5678,1,25.50

The schema is declared once in the header {sku,qty,price}, then each row is just CSV-style values. This is where the most dramatic token savings happen.
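To make the idea concrete, here is a minimal sketch of how a list of uniform records could be turned into a tabular array. It is not the official TOON encoder: the function name `encode_table`, the two-space row indent, and the lack of quoting or escaping are simplifying assumptions for illustration.

```python
def encode_table(rows, name=""):
    """Encode a non-empty list of uniform dicts as a TOON-style tabular array.

    Illustrative only: values containing the delimiter are not quoted here.
    """
    if not rows:
        raise ValueError("this sketch only handles non-empty lists")
    fields = list(rows[0].keys())
    # The single header is only valid if every row has the same keys.
    for row in rows:
        if list(row.keys()) != fields:
            raise ValueError("rows are not uniform")

    def fmt(value):
        # Booleans are written in lowercase, as in the examples above.
        if isinstance(value, bool):
            return "true" if value else "false"
        return str(value)

    header = name + "[" + str(len(rows)) + "]{" + ",".join(fields) + "}:"
    lines = [header]
    for row in rows:
        lines.append("  " + ",".join(fmt(row[f]) for f in fields))
    return "\n".join(lines)


orders = [
    {"sku": "LPT-1234", "qty": 2, "price": 1299.99},
    {"sku": "MSC-5678", "qty": 1, "price": 25.50},
]
print(encode_table(orders))
# [2]{sku,qty,price}:
#   LPT-1234,2,1299.99
#   MSC-5678,1,25.5
```

Note that Python prints the float `25.50` as `25.5`. The keys appear once in the header no matter how many rows follow, which is why the savings grow with the number of records.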

3. Smarter Syntax for Fewer Tokens

TOON has several clever tricks to trim the fat.

- Smart Quoting: TOON only quotes strings when absolutely necessary.

  - hello world → No quotes needed (inner spaces are fine)

  - hello 👋 world → No quotes (Unicode is safe)

  - "hello, world" → Quotes required (contains comma delimiter)

  - " padded " → Quotes required (leading/trailing spaces)

This minimal-quoting approach saves tokens while keeping the data unambiguous.

- Indentation Over Brackets: Like YAML, TOON uses indentation instead of curly braces for nested structures, which is cleaner and uses fewer characters.

  - JSON:

{ "product": { "sku": "LPT-1234", "details": { "name": "ProLaptop 15" } } }

  - TOON:

product:

  sku: LPT-1234

  details:

    name: ProLaptop 15
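Both syntax rules in this section are easy to sketch in code. The example below implements only the two quoting conditions listed above (a comma delimiter, or leading/trailing spaces) and renders nested dictionaries with two-space indentation. The function names are hypothetical, and a real encoder would have to cover more cases, such as escaping quotes inside strings.

```python
def quote_if_needed(text, delimiter=","):
    """Wrap text in double quotes only when the rules listed above require it."""
    needs_quotes = (
        delimiter in text          # would be read as a value separator
        or text != text.strip()    # leading/trailing spaces would be lost
    )
    return f'"{text}"' if needs_quotes else text


def encode_nested(obj, depth=0):
    """Render nested dicts as indented `key: value` lines (two spaces per level)."""
    pad = "  " * depth
    lines = []
    for key, value in obj.items():
        if isinstance(value, dict):
            # A nested object gets a bare `key:` line; its fields go one level deeper.
            lines.append(f"{pad}{key}:")
            lines.extend(encode_nested(value, depth + 1))
        else:
            lines.append(f"{pad}{key}: {quote_if_needed(str(value))}")
    return lines


doc = {"product": {"sku": "LPT-1234", "details": {"name": "ProLaptop 15"}}}
print("\n".join(encode_nested(doc)))
# product:
#   sku: LPT-1234
#   details:
#     name: ProLaptop 15
```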

4. Improved LLM Comprehension

This is a surprising and crucial benefit. Token efficiency doesn’t matter if the LLM can’t understand the format. In benchmarks, TOON not only saved tokens but actually improved LLM accuracy.

Tests on different models (like GPT, Claude, Gemini) showed:

- TOON accuracy: 70.1%

- JSON accuracy: 65.4%

- Token reduction: 46.3%

Why does this happen? Because TOON provides explicit structure that helps the AI. For example, it includes the array length in brackets ([N]).

tags[3]: admin,ops,dev

Think of it like giving someone a checklist. By saying tags[3]:, you’re telling the AI, “Expect exactly three items here.” This small hint helps the model double-check its work, leading to fewer mistakes and more reliable results.
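The same hint is useful on the application side: if a model returns TOON, the declared length gives you a cheap consistency check. Here is a small sketch, assuming a single-line inline array like the one above. The helper name `length_matches` is hypothetical, and the naive comma split ignores quoted values.

```python
import re

# Matches lines such as "tags[3]: admin,ops,dev".
INLINE_ARRAY = re.compile(r"^(\w+)\[(\d+)\]:\s*(.*)$")


def length_matches(line):
    """Return True if the declared [N] equals the number of listed items."""
    match = INLINE_ARRAY.match(line)
    if match is None:
        raise ValueError(f"not an inline array: {line!r}")
    declared = int(match.group(2))
    body = match.group(3)
    # Naive split: does not handle quoted values that contain commas.
    items = body.split(",") if body else []
    return declared == len(items)


print(length_matches("tags[3]: admin,ops,dev"))  # True
print(length_matches("tags[3]: admin,ops"))      # False
```

A mismatch is a strong signal that the output was truncated or that the model dropped an item.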

5: Head-to-Head Comparison: TOON vs. JSON

Let’s put these two formats side-by-side across several important criteria.

| Criterion | JSON (JavaScript Object Notation) | TOON (Token-Oriented Object Notation) |

|---|---|---|

| Primary Goal | Human-readable, general-purpose data exchange. | AI-efficient, high-performance data processing. |

| Human Readability | Excellent. Very easy for developers to read and write. | Good. More like YAML; indentation helps, but can be less familiar. |

| Token Efficiency | Low. Significant syntactic overhead consumes tokens. | Very High. Designed to minimize token usage (30-60% savings). |

| LLM Comprehension | Good. Models are trained on it extensively. | Excellent. Explicit structure can improve accuracy and reduce errors. |

| Parsing Speed | Good. Fast parsers exist, but still an extra step. | Excellent. Simpler syntax allows for extremely fast parsing. |

| Compactness | Fair. More verbose due to repeated keys and syntax. | Excellent. Very compact, especially with schema-awareness. |

| Ecosystem & Support | Massive. Ubiquitous support in all languages, tools, and databases. | Growing. New, but libraries and tools are emerging (e.g., npm package). |

| Best Use Case | Web APIs, configuration files, general data interchange. | AI model training data, AI-to-AI communication, high-throughput AI inference. |

This table makes the trade-off clear. You are essentially choosing between human-friendliness and ecosystem support (JSON) versus AI performance and efficiency (TOON).

6: Practical Use Cases: When to Use TOON?

TOON is not a replacement for JSON in every scenario. It’s a specialized tool. The best advice is: “Use JSON programmatically, convert to TOON for LLM input.”

Here’s a more detailed breakdown:

✅ Use TOON When:

- You are sending large datasets to LLMs (hundreds or thousands of records).

- You are working with uniform data structures (database query results, CSV exports, analytics).

- Token costs are a significant concern for your project.

- You’re making frequent LLM API calls with structured data.

❌ Stick With JSON When:

- You are building traditional REST APIs for browsers or other applications.

- You are storing data in databases or configuration files.

- You are working with deeply nested or highly non-uniform data.

- You need universal compatibility with existing tools and developer workflows.

Coding Example: A Conceptual Workflow

Let’s imagine a Python function that prepares product data for an AI model.

import json

# Assuming a hypothetical TOON library is installed

import toon

# This is our data, likely coming from a database

product_data = {

"products": [

{ "sku": "LPT-1234", "productName": "ProLaptop 15", "inStock": True, "price": 1299.99 },

{ "sku": "MSC-5678", "productName": "Wireless Mouse", "inStock": False, "price": 25.50 }

]

}

# Step 1: Work with the data in JSON (the universal format)

# This is easy for developers to manipulate.

json_output = json.dumps(product_data)

# print(json_output)

# '{"products": [{"sku": "LPT-1234", "productName": "ProLaptop 15", ...}, ...]}'

# Step 2: Convert to TOON just before sending to the AI

toon_output = toon.encode(product_data)

# print(toon_output)

# products[2]{sku,productName,inStock,price}:

#   LPT-1234,ProLaptop 15,true,1299.99

#   MSC-5678,Wireless Mouse,false,25.50

# Step 3: Send the `toon_output` string to the LLM API

# send_to_llm(api_endpoint, toon_output)

This workflow shows how you can get the best of both worlds: developer-friendly JSON for your application and cost-effective TOON for your AI interactions.

7: The Challenges and The Road Ahead for TOON

While the benefits of TOON for AI are compelling, it’s not going to be an easy battle. JSON is an entrenched giant. Here are the hurdles TOON faces.

1. The Ecosystem Problem

This is the biggest challenge. JSON’s success is built on its ecosystem.

- Tools: Every IDE, text editor, and database has built-in support for JSON.

- Libraries: Every programming language has mature, robust, and secure libraries for working with JSON.

- Developer Knowledge: Every developer knows JSON. There’s no learning curve.

TOON is still new. While libraries (like the npm package @toon-format/toon) and CLI tools are emerging, building an ecosystem to rival JSON’s is a monumental task that requires widespread adoption.

2. The Human Factor

Developers are the ones who build and maintain these systems. We are the ones who debug the data when things go wrong.

- JSON’s readability makes debugging relatively easy. You can open a log file and understand the data at a glance.

- A compact, TOON-like string might be harder for a human to parse, making debugging a potential nightmare.

This trade-off between machine efficiency and developer experience is a critical one that teams will have to consider.

3. The Chicken-and-Egg Problem of Adoption

Why would a developer switch to TOON if no tools support it? Why would toolmakers build support for TOON if no developers are using it?

Breaking this cycle requires a major catalyst. Perhaps leading AI companies will start offering TOON-based APIs that are significantly faster or cheaper than their JSON counterparts. That might be the push needed to start the snowball rolling.

Wrap-Up: A Tale of Two Tools

The story of TOON vs. JSON is not about one format being “better” than the other in an absolute sense. It’s about using the right tool for the job.

JSON is the reliable, versatile, and universally understood workhorse. It will continue to be the dominant format for web development, configuration, and general-purpose data interchange for the foreseeable future. Its strength lies in its human-centric design and massive ecosystem.

TOON, on the other hand, is the specialized, high-performance instrument. It’s a race car built for a specific track: the AI superhighway. It sacrifices some human readability and ecosystem maturity for raw performance, token efficiency, and even improved AI comprehension. Its strength lies in its machine-centric design.

As AI becomes more deeply integrated into our digital infrastructure, the demands we place on our data formats will change. The bottlenecks created by JSON’s verbosity will become more pronounced. In those high-stakes, high-performance AI environments, a format like TOON isn’t just a nice-to-have; it’s a necessity.

The future of data is likely not a world where TOON completely replaces JSON. It’s more likely a hybrid world where developers and systems will choose the format that best suits the consumer of the data. If the consumer is a human or a web browser, they’ll choose JSON. If the consumer is an AI model, they will increasingly reach for the tool built just for it: TOON. The rise of AI may not dethrone the king, but it has certainly created a need for a powerful new specialist in the kingdom of data.

FAQs

What exactly is JSON, and why is it so popular?

Think of JSON as a universal language for writing down information. It’s like a digital filing cabinet that became the standard for the internet because it’s clean, easy for people to read, and every computer system understands it. It’s the go-to for sending data between a website and its server.

Okay, so what is this new ‘TOON’ format everyone’s talking about?

TOON is a new way of writing data, but it was built specifically for Artificial Intelligence. Its main goal isn’t to look pretty for humans, but to be as short and efficient as possible for AI models to read. Think of it as a shorthand version of data designed to save you money on AI costs.

What’s the big problem with using JSON for AI that TOON is trying to fix?

The main issue is the “token tax.” AI services charge you based on the number of “tokens” (pieces of words) they process. In JSON, a huge chunk of those tokens are just fluff—quotes, commas, and repeated labels like "name" or "email" for every single item. This fluff adds up, making your AI bill more expensive.

How does TOON actually save so much space? Can you give me a simple example?

Its biggest trick is for lists of similar items. Imagine a list of 100 employees. In JSON, you write "id", "name", "role" 100 times. In TOON, you write those column labels just once at the top, like a header row in a spreadsheet. Then, you just list the data rows underneath. This avoids repeating the same words over and over, cutting down the token count dramatically.

Is TOON better than JSON? Should I switch everything over?

Not necessarily “better,” just “different.” It’s like having a race car and a family minivan. You wouldn’t use the race car for a grocery run, and you wouldn’t take the minivan to a race track. They’re built for different jobs. JSON is the minivan—great for everyday use. TOON is the race car—built for high-speed AI tasks.

So, when is it still a good idea to stick with JSON?

You should definitely stick with JSON for most traditional tasks. This includes building websites, creating configuration files, or storing data in a database. Basically, if humans need to read and edit the data often, or if you need your tools to work with it everywhere, JSON is still the champion.

And when would it be smart to start using TOON?

TOON is the smart choice when your main audience is an AI model. This is perfect for situations like sending huge datasets to an AI for analysis, or when you’re making thousands of

If TOON is so compact, is it confusing for AI models to understand?

Surprisingly, no! In fact, some tests show AI models understand TOON better than JSON. Because TOON’s structure is so explicit (for example, it might say users[5]: to show there are exactly 5 users), it gives the AI helpful clues. This helps the AI double-check its work and actually make fewer mistakes.

What’s the biggest catch or downside to using TOON right now?

The biggest challenge is that it’s the new kid on the block. JSON has decades of support—every tool, programming language, and developer knows it. TOON is still building its ecosystem. There aren’t as many tools available yet, and fewer developers are familiar with it, which can make debugging a bit harder.

Do you think TOON will eventually make JSON obsolete?

Probably not. It’s more likely they will coexist and be used for different things. The future will probably involve developers using JSON within their own applications because it’s easy to work with, and then converting that data to TOON right before sending it to an AI to save on costs and improve performance. They’ll be partners, not replacements.